In this post, I would like to share my observations of the most common mistakes teams and organizations make in their day-to-day work with IT systems. This list is based on my long-years experience of working with different customers and international teams so that you can consider it as independent of location or industry.

Mistake #1: No ITSM

The number one mistake is not keeping track of your work and not having a specific system for this in place. I still encounter a lot of cases, especially among small businesses, when all requests for technical support are stored as email correspondence or as stickers on monitors. A more advanced option is to have a shared Excel spreadsheet where technicians log their tasks or at least try to. Apart from the undeniable fact that these approaches do not work well at scale, they also introduce a lot of mess, conflicting tasks and unwanted downtimes.

In modern IT Operations, working without ITSM for Ops is like working without a version source control system for Devs. Even if you are the only one guy or gal in operations, having the records of all support requests, changes to system configuration, other tasks you worked on will save you a lot of time and effort in multiple cases. For example, if some IT system goes down, the first place to start your investigation is to look through the recent changes that might have affected that system. In almost 80% of downtimes, a system can be brought back to normal by simply reverting the last change. Another typical case is communication with your customers, either external or internal. Customers who can easily see and track the current state of their requests for supports are less likely to call you every hour or escalate in any other way on their cases.

One more important aspect of having good ITSM is that it becomes a reliable and trusted source of information about all your IT systems. When you can query your ITSM about the list of incidents affected individual components of your infrastructure or the history of changes that impacted them, you can identify problem points based on the factual data and not only on your gut feelings. Apart from that, if you need to onboard a new member of your operational team or perform knowledge transfer, it can be accomplished much faster because, after the introduction to your ITSM and its key principles, the major part of newcomer’s learning can be self-paced.

Mistake #2: No clear processes

The second most common and really critical mistake is not establishing and enforcing unified operational processes. Business demands some level of predictability and reliability from the IT systems it depends on. Usually, these requirements are documented in the form of Service Level Agreements (SLA) between an IT department, as a service provider, and other departments, as customers or service consumers. If each request for support in the IT department is treated and handled differently, it is almost impossible to satisfy those requirements.

If you put yourself in customer shoes, the bare minimum you expect from any service provider except the cost is how long it takes to have feedback on your request. Would you go to Starbucks if each time you visit it, the wait time for your cup of coffee varies from 5 minutes to 5 hours or 5 days? You can call me insane, but that is how customers often see their experience when turning to IT support for help. Instead of providing them with clear and consistent expectations, it takes a completely different amount of time to fulfill the same type of request on each occasion.

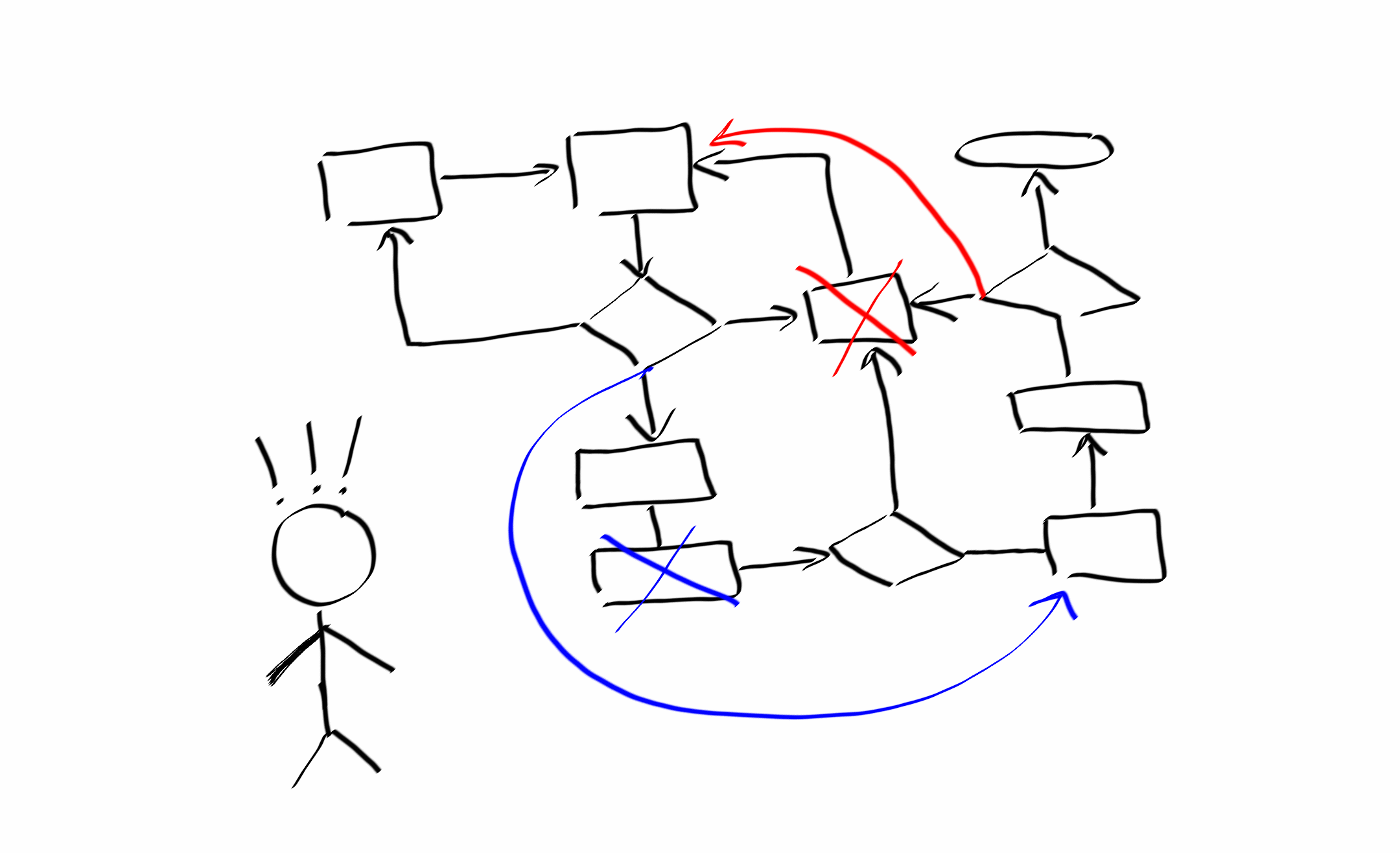

Another edge case of the same problem is having so complex operational framework that it becomes hard to follow it. So, instead of focusing on the value a process brings to work, people introduce additional steps or routines only for the sake of the process itself. For instance, when I see a diagram of the incident management process with 50+ steps, I definitely know that this organization has severe issues in that area of IT Operations. If you cannot draw your process on a single A4 paper or describe it less than in 1 minute, I doubt you can follow it on an everyday basis.

Mistake #3: No monitoring

I have already written a whole article on Top-5 mistakes in IT monitoring, but here I would like to address the question from a broader, i.e., an operational point of view.

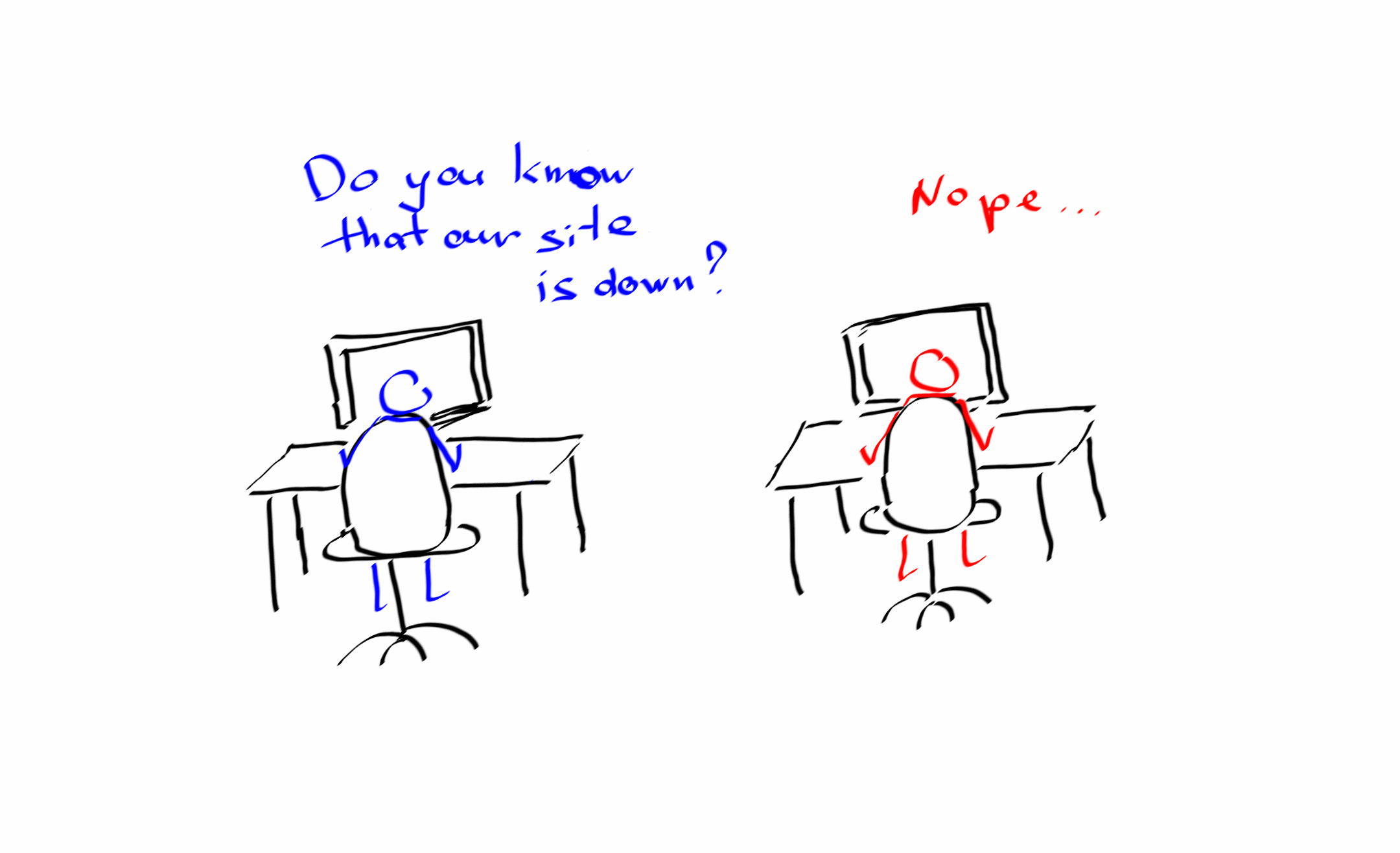

The worst case of insufficient monitoring is when it is your customers who notify you first about the issues in your systems. It literally means that you know nothing about the system state and cannot support it effectively and reliably. You basically cannot be trusted as a service provider.

If you do not monitor the health of your IT systems continuously, you have no means to ensure that they function according to business requirements. System support becomes a full mess and you entrust your future to a matter of chance. To avoid this, organizations usually adopt different tools and methods to monitor their infrastructure and applications. Unfortunately, many of them forget about the need to establish a unified monitoring process and end up just with a bunch of incompatible tools, which use different notification methods and do not provide a full picture upon incidents.

As a best practice, each IT system which is going to production state must be put on monitoring. If system configuration changes, these changes must be reflected in the monitoring setup too. Monitoring is more about the process than a single event. You cannot configure your monitoring once and forever. You have to verify and adjust it on each change to the monitored systems.

Mistake #4: No documentation

Ah, the most unpleasant part of engineering work, writing these stupid long reads that nobody is going to look into. That is how many technical people see this when they are tasked with writing the documentation for the system they created or configured. On the contrary, the first thing an engineer asks for when starts working with a new system or in a new project is whether some docs can shed light on the internals of that stuff. Don’t you find this behavior a little bit confusing?

The problem is that writing and keeping documentation in an up-to-date state requires a lot of effort and discipline from a team and its members. Often, due to pressure or deadlines or lack of time, this task is abandoned in favor of “more important work.” Piece by piece, this creates what is called a technical debt because good and explanatory documentation is a critical part of any computer system, either application or infrastructure.

Consider an example from the bus factor point of view, which I described in my Notes on “The Phoenix Project.” One of your engineers who supports a critical computer system decided to leave for some reason. He or she is only the one person who knew everything about the system, and there is no such second (another engineer) or third (documentation) party that shares that knowledge. How are you going to support that critical system? Sure, you can try to persuade this engineer to stay while you are looking for a replacement or ask him or her to write some manuals before leaving. In real life, neither of the mentioned is workable: the search for a new engineer might take up to a few months and such “exit documentation” is usually very limited and poor in quality. So, you got into trouble.

Like in programming, the not documenting systems you operate will eventually force you to pay that debt.

Mistake #5: No review

Due to the complexity of computer systems, operating them might require a lot of different teams to interact and collaborate. The more people participate in some process, the harder it becomes to gather all the pieces of that puzzle and to evaluate the process.

The key thing, however, is that due to the changes inside an organization or outside of it, e.g., on the market, old approaches of getting the job done stop working, or become ineffective, or worse – start hurting instead of helping. These changes are usually hard to observe and notice especially if you are just doing your part of the work and don’t see a whole picture.

One of the major factors that we, as a human species, evolved into what we are today, is an adaptation. If you do not adapt to changing conditions, you will end up as dinosaurs did a long time ago. On the other hand, adaptation requires a lot of mental energy to change your perception and, consecutively, the behavior. Some of our primordial parts are very resistant to all “unnecessary” energy expenditures, and that is why people sometimes can be very reluctant to any changes in their work environment.

To overcome this reluctance challenge, you should review and evaluate all parts of your operation on a regularly scheduled basis. These reviews must target not only processes and systems but, most importantly, the people operating them. For many of us, it is hard to admit that in most cases, this is we who fucked up, and that is we who should change because neither computer systems or processes can do so on their own.

Member discussion: